GSTR:

Advancing Human-Computer Interaction through Facial Gesture Recognition

Introduction

In the ever-evolving landscape of human-computer interaction (HCI), the need for intuitive and accessible input methods has never been more pressing. Traditional input devices such as keyboards and mice, while effective for many users, can present significant challenges for individuals with limited hand mobility. Enter GSTR (Gesture and Speech Tools for Input Recognition), an innovative solution that leverages facial gestures and voice commands to revolutionize how we interact with our computers.

Created by conducting user interviews for the target users (People with Disabilities), performing requirement analyses and iterative development loops, GSTR takes in customized semantic gestures, and let’s users control their PCs with relative ease, reducing times for simple functionalities by orders of magnitude.

Technical Overview

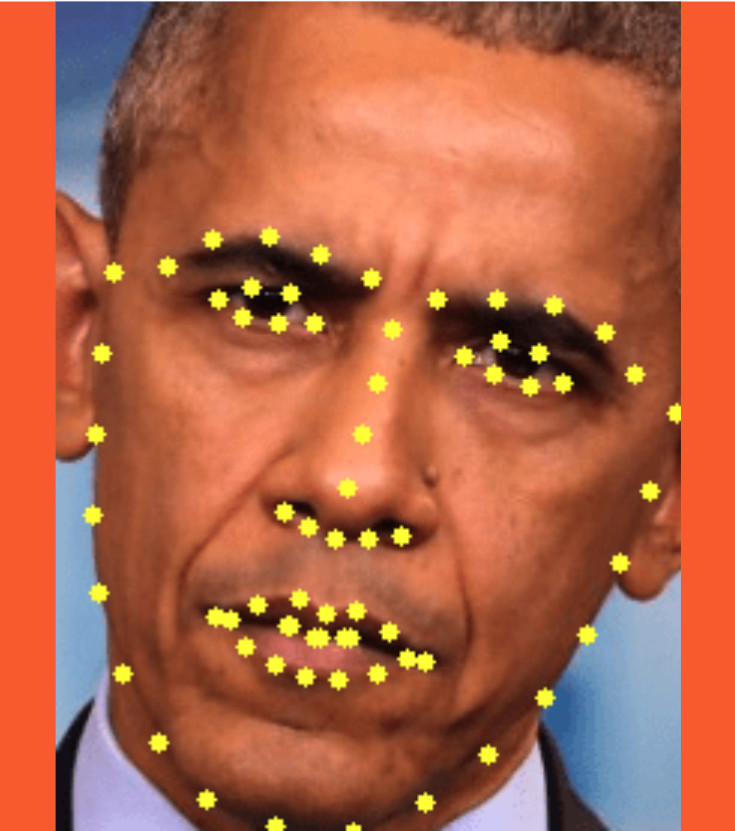

GSTR is built on a robust foundation of computer vision and machine learning techniques. At its core, the system utilizes a facial landmark detection model capable of identifying 68 distinct points on a user’s face. This granular facial mapping allows for precise tracking of various facial features, enabling a wide range of gesture-based inputs.

Key Components

-

Face Detection: The system employs Dlib’s HOG-based face detector to locate faces within the video stream.

-

Facial Landmark Prediction: Once a face is detected, a pre-trained shape predictor model (

shape_predictor_68_face_landmarks.dat) is used to identify and track the 68 facial landmarks. -

Feature Extraction: The system calculates several key metrics:

- Eye Aspect Ratio (EAR)

- Mouth Aspect Ratio (MAR)

- Jaw Open Ratio (JOR)

- Eyebrow Raise

- Smile Ratio

-

Gesture Recognition: By analyzing changes in these metrics over time, GSTR can recognize various semantic gestures, such as:

- Blinking (single and double)

- Mouth opening

- Eyebrow raising

- Smiling

-

Input Mapping: Recognized gestures are mapped to specific computer inputs, such as:

- Mouse movement (based on nose position)

- Left and right clicks

- Scrolling

- Anchor point resetting

-

Voice Integration: While not fully implemented in the provided code snippet, the system is designed to incorporate voice commands for additional functionality.

Implementation Details

The GSTR system is implemented in Python, leveraging several key libraries:

- OpenCV (cv2) for video capture and image processing

- dlib for face detection and facial landmark prediction

- imutils for convenient image processing functions

- PyAutoGUI for programmatic control of the mouse and keyboard

The main processing loop captures video frames, detects faces, and extracts facial landmarks. It then calculates the various facial metrics and uses these to determine if specific gestures have been performed.

Gesture Detection Algorithms

-

Blinking Detection: The system tracks the Eye Aspect Ratio (EAR) and detects rapid changes that indicate blinks. It can distinguish between single and double blinks based on the timing between blink events.

-

Mouth Opening: The Jaw Open Ratio (JOR) is used to detect when the user opens their mouth wide. This gesture toggles the scroll mode.

-

Eyebrow Raising: By comparing the positions of the eyebrows relative to the eyes and jaw, the system can detect when the user raises their eyebrows. This gesture resets the anchor point for mouse movement.

-

Smiling: The system calculates a smile ratio based on mouth shape and triggers a right-click when a smile is detected.

Mouse Control

Mouse control is achieved by tracking the movement of the user’s nose relative to an anchor point. The system calculates the direction and magnitude of this movement and translates it into corresponding mouse movements on the screen.

Challenges and Optimizations

Developing GSTR presented several technical challenges:

-

Precision vs. Sensitivity: Balancing the system’s responsiveness with the need to avoid false positives required careful tuning of thresholds for each gesture.

-

Performance Optimization: Real-time processing of video frames and facial landmark detection is computationally intensive. Optimizations were necessary to ensure smooth operation, including:

- Using a reduced resolution for video capture

- Implementing efficient numpy operations for metric calculations

- Limiting the facial region of interest to reduce processing overhead

-

Calibration: To account for variations in facial structures and camera setups, the system includes mechanisms for setting anchor points and adjusting sensitivity.

Future Enhancements

While GSTR already provides a powerful set of interaction capabilities, there are several areas for future enhancement:

-

Customizable Gesture Mapping: Allowing users to define their own gestures and map them to specific actions.

-

Advanced Voice Integration: Fully integrating voice command processing for a more comprehensive hands-free experience. Recent LLMs would align perfectly with this.

-

Adaptive Calibration: Implementing an automatic calibration system that adapts to changes in lighting conditions and user positioning.

Conclusion

GSTR represents a significant step forward in accessible human-computer interaction. By leveraging the power of computer vision and facial gesture recognition, it opens up new possibilities for users with limited mobility and paves the way for more natural and intuitive computer interfaces. As we continue to refine and expand this technology, we move closer to a future where our computers truly understand and respond to our most subtle expressions.